River

Abstract

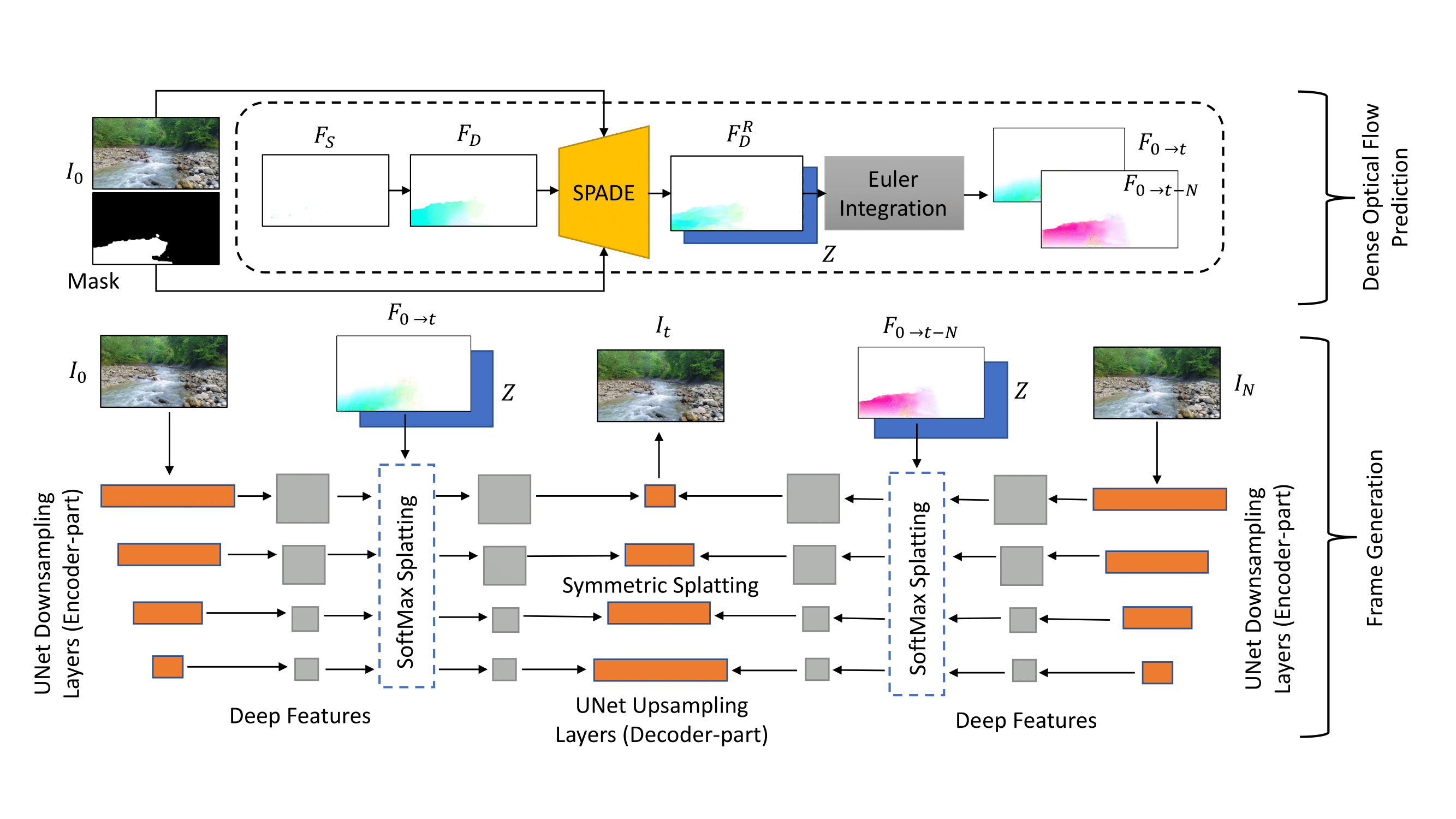

We propose a method to interactively control the animation of fluid elements in still images to generate cinemagraphs. Specifically, we focus on the animation of fluid elements such as water, smoke, and fire, which have the properties of repeating textures and continuous fluid motion. Taking inspiration from prior works, we represent the motion of such fluid elements in the image in the form of a constant 2D optical flow map. To this end, we allow the user to provide any number of arrow directions and their associated speeds, along with a mask of the regions the user wants to animate. The arrow directions, their speed values, and the mask are then converted into a dense, constant optical flow map (FD). We observe that FD, obtained using simple exponential operations, can closely approximate the plausible motion of elements in the image. We further refine the computed dense optical flow map FD using a generative adversarial network (GAN) to obtain a more realistic flow map. We devise a novel UNet-based architecture to autoregressively generate future frames using the refined optical flow map by forward-warping the input image features at different resolutions.
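To make the hint-to-flow step more concrete, here is a minimal sketch of how a few arrow hints and a mask might be turned into a dense flow map. The page only says that "simple exponential operations" are used, so the Gaussian-style distance weighting, the normalization by the weight sum, the `sigma` parameter, and the function name `sparse_to_dense_flow` are all assumptions made for illustration, not the authors' exact formulation.

```python
import numpy as np

def sparse_to_dense_flow(hints, mask, sigma=50.0):
    """Spread sparse arrow hints into a dense flow map (illustrative only).

    hints: list of (x, y, dx, dy) tuples, one per user arrow (pixels/frame).
    mask:  (H, W) boolean array, True where the image should be animated.
    sigma: assumed falloff distance in pixels (hypothetical parameter).
    """
    h, w = mask.shape
    ys, xs = np.mgrid[0:h, 0:w]
    flow = np.zeros((h, w, 2), dtype=np.float64)
    weight_sum = np.zeros((h, w), dtype=np.float64)

    for x, y, dx, dy in hints:
        # Assumed weighting: exponential decay with squared distance to the hint.
        dist_sq = (xs - x) ** 2 + (ys - y) ** 2
        wgt = np.exp(-dist_sq / sigma ** 2)
        flow[..., 0] += wgt * dx
        flow[..., 1] += wgt * dy
        weight_sum += wgt

    # Assumed normalization so that speeds stay in the range of the hints.
    flow /= np.maximum(weight_sum, 1e-12)[..., None]
    # Pixels outside the user mask stay static.
    flow[~mask] = 0.0
    return flow
```

With a single hint, every masked pixel receives that hint's velocity; with several hints, each pixel is dominated by the nearest arrows. In the method described here, such a map would only be a starting point that the GAN then refines.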

We conduct extensive experiments on a publicly available dataset and show that our method is superior to the baselines in terms of qualitative and quantitative metrics. In addition, we show the qualitative animations of the objects in directions that did not exist in the training set and provide a way to synthesize videos that otherwise would not exist in the real world.

Overview Diagram

The figure shows our full pipeline. The inputs to our system are the input image, the user-provided mask indicating the region to be animated, and motion hints, FS. The motion hints are converted into a dense flow map FD using simple exponential operations on FS, and FD is then refined by a SPADE network, GF, to obtain FRD. At test time, the input image is fed to the UNet in place of both I0 and IN. The UNet also receives the Euler-integrated flow maps for frame t, in both the forward and backward directions, which are used to perform symmetric splatting in deep feature space, and it outputs the t-th frame, It.
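The Euler integration mentioned above can be illustrated with a short sketch: a constant per-frame flow map is accumulated step by step, so that each pixel's displacement to frame t follows the flow along its path. The nearest-neighbour lookup, the border clamping, and the function name `euler_integrate` are simplifying assumptions for illustration; the actual pipeline may sample the flow differently.

```python
import numpy as np

def euler_integrate(flow, steps):
    """Accumulate a constant per-frame flow into a displacement field.

    flow:  (H, W, 2) array of per-frame motion (dx, dy) in pixels.
    steps: number of frames to integrate over.
    Returns an (H, W, 2) array: displacement of each pixel after `steps` frames.
    """
    h, w, _ = flow.shape
    ys, xs = np.mgrid[0:h, 0:w]
    disp = np.zeros_like(flow, dtype=np.float64)

    for _ in range(steps):
        # Where each pixel currently is, rounded to the nearest grid point
        # and clamped to the image (assumed nearest-neighbour sampling).
        px = np.clip(np.rint(xs + disp[..., 0]), 0, w - 1).astype(int)
        py = np.clip(np.rint(ys + disp[..., 1]), 0, h - 1).astype(int)
        # Advance every pixel by the flow found at its current position.
        disp += flow[py, px]
    return disp
```

Under these assumptions, the forward map for frame t of an N-frame loop could be obtained as `euler_integrate(flow, t)` and the backward map as `euler_integrate(-flow, N - t)`; the two would then drive the symmetric splatting of features from the start and end of the loop.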

All Stages


Controllable Animation

High-Resolution (HD) Videos

Waterfall

Although the previous example videos were generated at 288x512, our model can generate videos at any resolution. Here we show two videos generated at 720x1280 resolution (HD).

Other Domains

Our method can generate animations not only for water-based elements such as waterfalls, lakes, rivers, and seas, but also for other fluid elements such as smoke and fire (which our model has not seen during training).

Additional Results

- Controlled Video Generation using Our Method [Link]

- Optical Flow Comparison with Controllable Baselines [Link]

- Video Comparison with Controllable Baselines [Link]

- Our-Method All Stages [Link]

- Our Method vs. Holynski et al. Videos [Link]

- Additional Results on Mask Variations and Different Domains [Link]

BibTeX

@inproceedings{mahapatra2021controllable,
  title={Controllable Animation of Fluid Elements in Still Images},
  author={Mahapatra, Aniruddha and Kulkarni, Kuldeep},
  booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
  year={2022}
}

Acknowledgements

Special thanks to Gaurav Sinha and Simon Niklaus.
